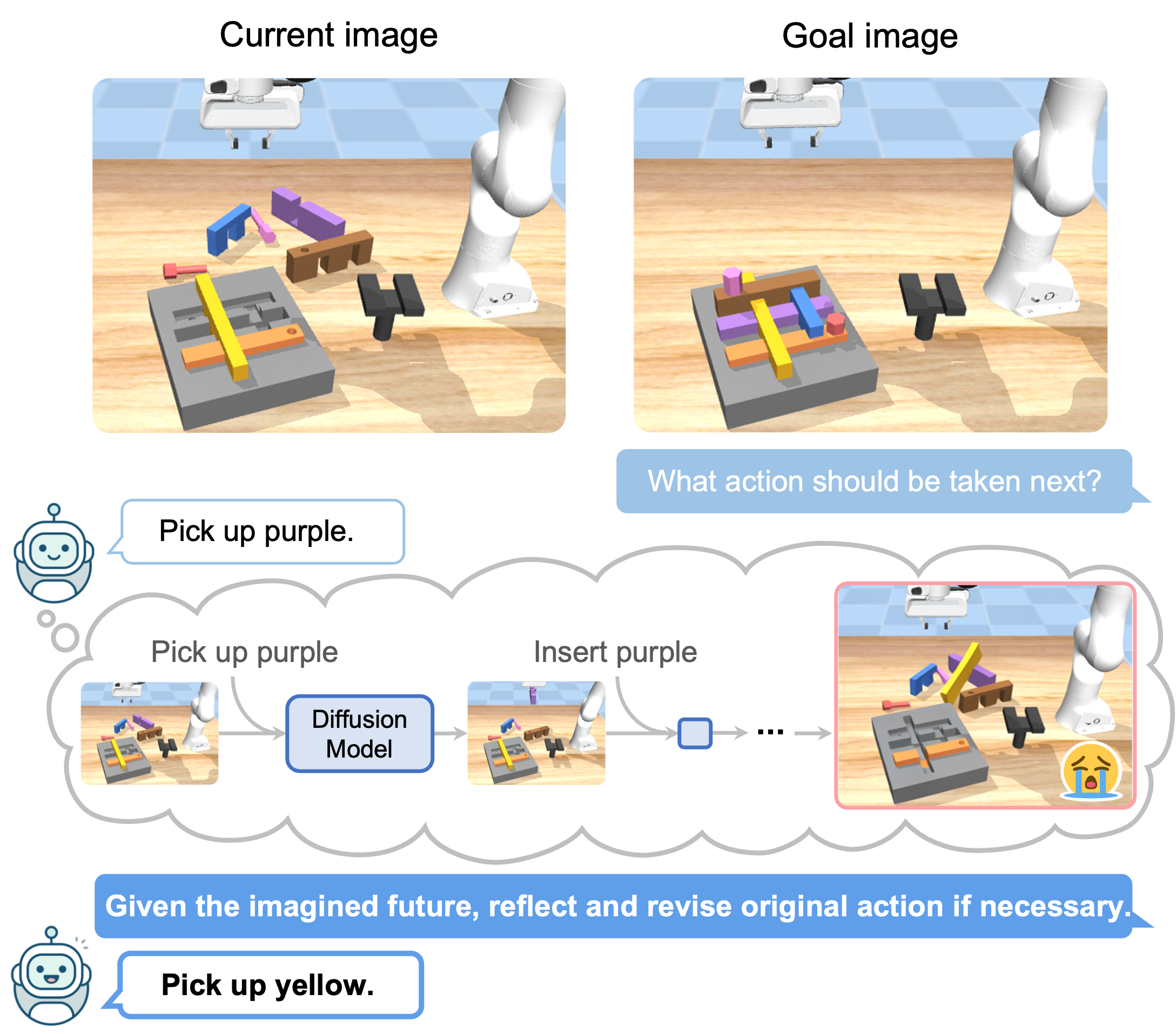

Method Overview

To address the challenges of physical interaction and long-horizon reasoning in multi-stage robotic manipulation, we present a framework that equips VLMs with reflective planning. Our approach combines two key components: (1) a diffusion-based dynamics model that enables the VLM to imagine and evaluate future states, and (2) an interactive learning mechanism that allows the VLM to reflect on and revise its decisions based on these imagined outcomes. Together, these components enable more robust manipulation planning while preserving the benefits of pre-trained VLMs.
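The propose, imagine, reflect cycle described above can be sketched as a single planning step. This is a minimal sketch, not the authors' implementation: `ReflectivePlanner`, `propose`, `imagine`, and `reflect` are hypothetical names, the three callables stand in for the VLM and the diffusion dynamics model, and the lookahead is simplified to one imagined step.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical aliases: a real system would use images as observations and
# text commands as actions; the toy usage below uses ints and strings.
State = Any
Action = str


@dataclass
class ReflectivePlanner:
    # VLM policy (hypothetical): (observation, goal) -> proposed action
    propose: Callable[[State, State], Action]
    # Dynamics model (hypothetical): (observation, action) -> imagined next state
    imagine: Callable[[State, Action], State]
    # VLM reflection (hypothetical): (observation, goal, proposed action,
    # imagined state) -> final action, either the proposal or a revision
    reflect: Callable[[State, State, Action, State], Action]

    def step(self, obs: State, goal: State) -> Action:
        proposed = self.propose(obs, goal)          # initial plan
        imagined = self.imagine(obs, proposed)      # predicted outcome of the plan
        return self.reflect(obs, goal, proposed, imagined)  # keep or revise


# Toy usage: the state counts placed pieces; "wait" makes no progress in the
# imagined state, so reflection revises it to "place".
planner = ReflectivePlanner(
    propose=lambda obs, goal: "wait",
    imagine=lambda obs, action: obs + 1 if action == "place" else obs,
    reflect=lambda obs, goal, action, imagined: action if imagined > obs else "place",
)
print(planner.step(0, 3))  # place
```

Passing the components in as callables keeps the loop independent of any particular VLM or generative model; a multi-step lookahead would call `imagine` repeatedly before invoking `reflect`.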

Multi-Stage Robotic Manipulation Planning Tasks

We procedurally generated a variety of multi-stage manipulation tasks, ranging from simple peg insertion to complex assemblies with multiple interlocking pieces. The videos below show some example tasks.

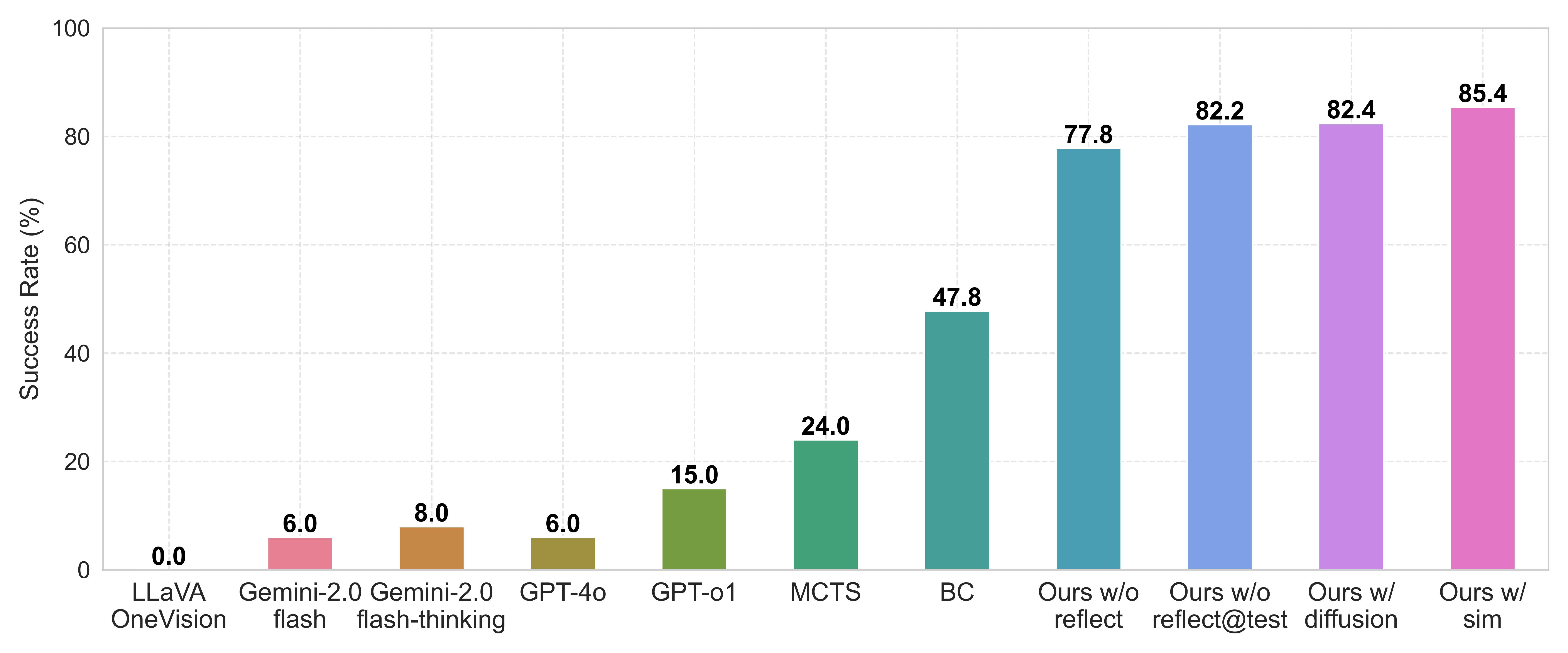

Quantitative Results

Success rate (%) on 100 test tasks. Our method outperforms state-of-the-art commercial VLMs and Monte Carlo Tree Search (MCTS). All tasks are shown in the table below.

| Test Task ID | Initial Configuration | Goal Configuration |
|---|---|---|

Qualitative Results

Success cases

Our method solves a variety of long-horizon manipulation tasks with high success rates by leveraging a diffusion dynamics model to imagine future states, reflect on the initial plan, and propose a better action.

Failure cases

Here we show some failure cases of our method. Common failure modes include incorrect object manipulation sequences and unrecoverable failures of low-level action primitives.

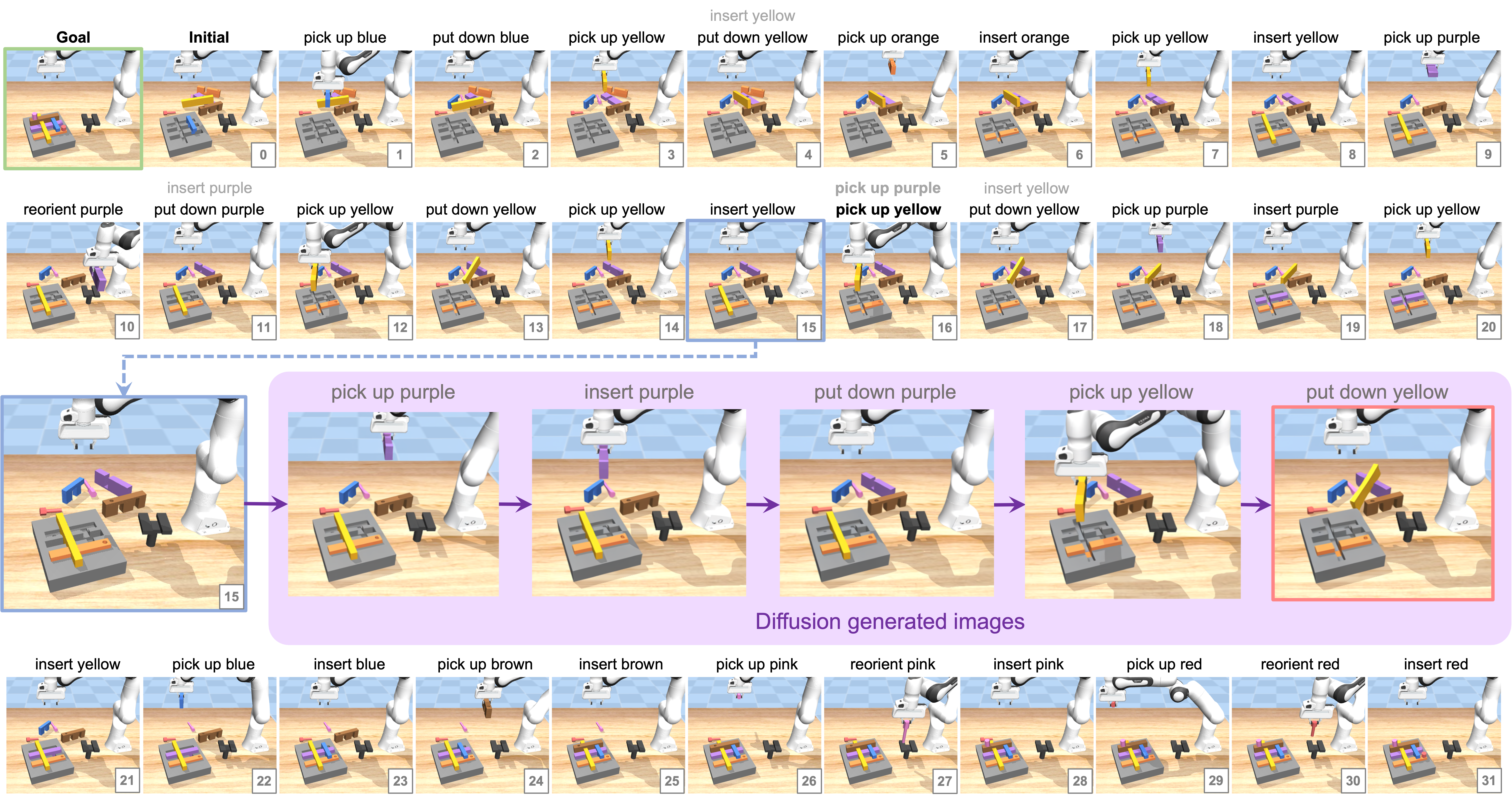

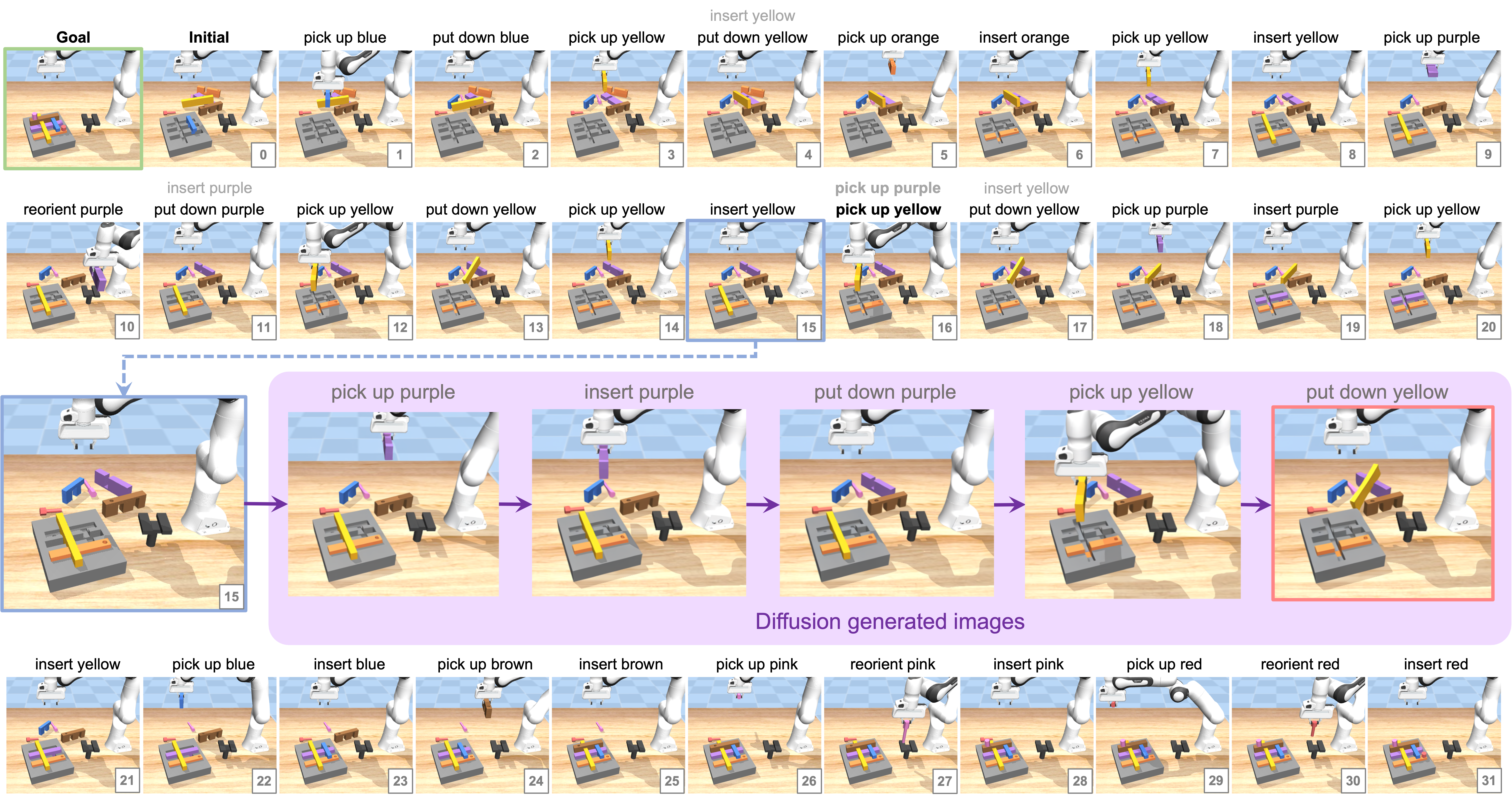
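To make the first failure mode concrete: in an assembly task some pieces can only be manipulated after others, so an incorrect sequence is one that reaches a step whose prerequisites are not yet satisfied. The sketch below is a hypothetical illustration, not part of the method; it encodes precedence constraints as a dictionary (the piece names and the `first_order_violation` helper are made up) and reports the first step that violates them.

```python
from typing import Dict, List, Optional, Set


def first_order_violation(sequence: List[str],
                          prerequisites: Dict[str, Set[str]]) -> Optional[int]:
    """Return the index of the first step whose prerequisites have not all
    been executed earlier in the sequence, or None if the order is valid."""
    done: Set[str] = set()
    for i, step in enumerate(sequence):
        # Every prerequisite of this step must already be in `done`.
        if not prerequisites.get(step, set()) <= done:
            return i
        done.add(step)
    return None


# Hypothetical constraint: the red peg can only be inserted after the blue
# and green bricks are in place.
prereqs = {"insert red": {"insert blue", "insert green"}}
print(first_order_violation(["insert blue", "insert red", "insert green"], prereqs))  # 1
print(first_order_violation(["insert blue", "insert green", "insert red"], prereqs))  # None
```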

Highlight example

A detailed example of our method solving a complicated assembly task. Frames are indexed by timestep. The goal image is in the top-left corner (with a green border). Each frame shows the observation after executing the action printed in black above it; when an action was revised after reflection, the action originally proposed by the VLM is shown in gray. We highlight the reflection process at timestep 15: the VLM first proposes picking up the purple brick, but after reflection it chooses to pick up the yellow brick instead, because the generated future state (red-bordered image) shows little progress toward the goal. Note that the purple brick cannot be inserted until the yellow brick is removed.

BibTeX

@misc{feng2025reflective,
  title={Reflective Planning: Vision-Language Models for Multi-Stage Long-Horizon Robotic Manipulation},
  author={Yunhai Feng and Jiaming Han and Zhuoran Yang and Xiangyu Yue and Sergey Levine and Jianlan Luo},
  year={2025},
  eprint={2502.16707},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2502.16707},
}

¹Cornell University, ²CUHK, ³Yale University, ⁴UC Berkeley